AI Recognition Tool - Outstanding Design Award

Overview

With AI-generated content becoming more common, detecting it in long, structured documents—like essays or legal contracts—has become a major challenge. Existing tools often fall short, offering vague scores without explaining which parts are AI-written or why.

In March 2025, I joined the AI Recognition Tool Design Challenge during the University of Maryland Info Challenge, a week-long competition focused on designing a solution to this problem.

Our goal: build a tool that could accurately and transparently detect AI content in complex documents.

As a two-person team, we used a rapid design sprint to research, ideate, prototype, and test our solution—earning the Outstanding Design Award for its innovation and usability.

Categories

UX Design

Interaction Design

Design Sprint

Date

March 2025

Client

MindPetal

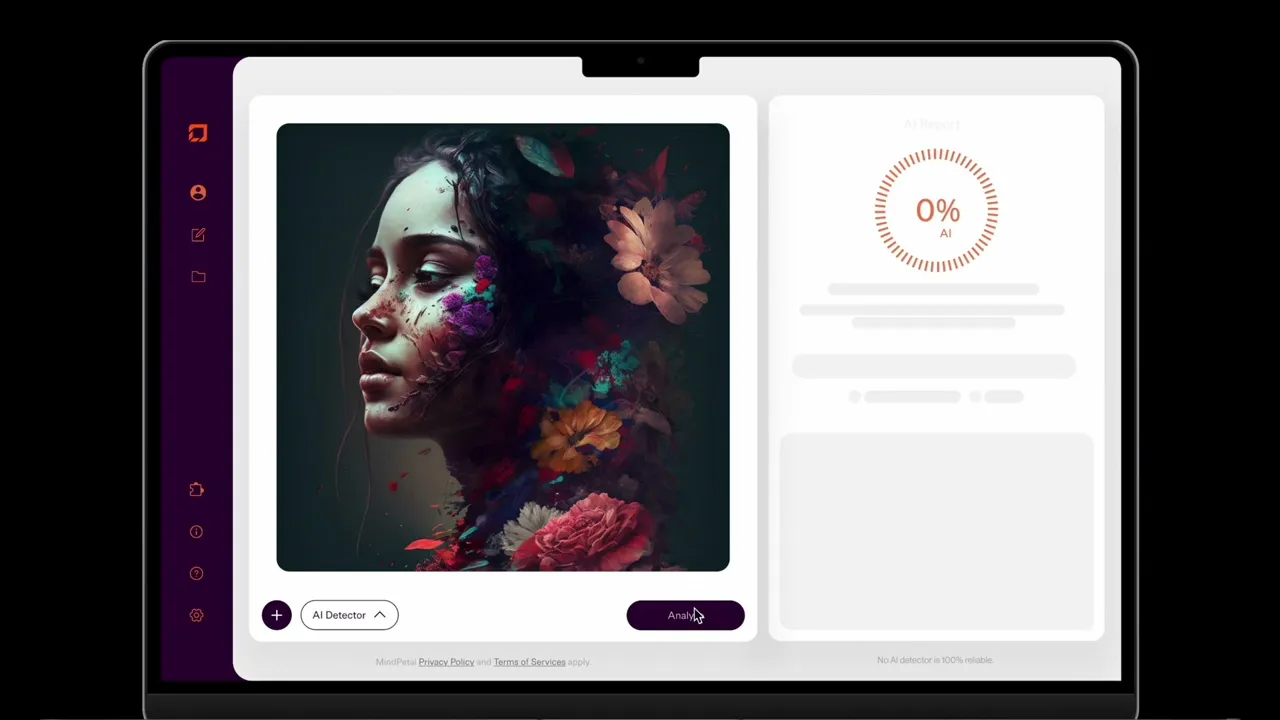

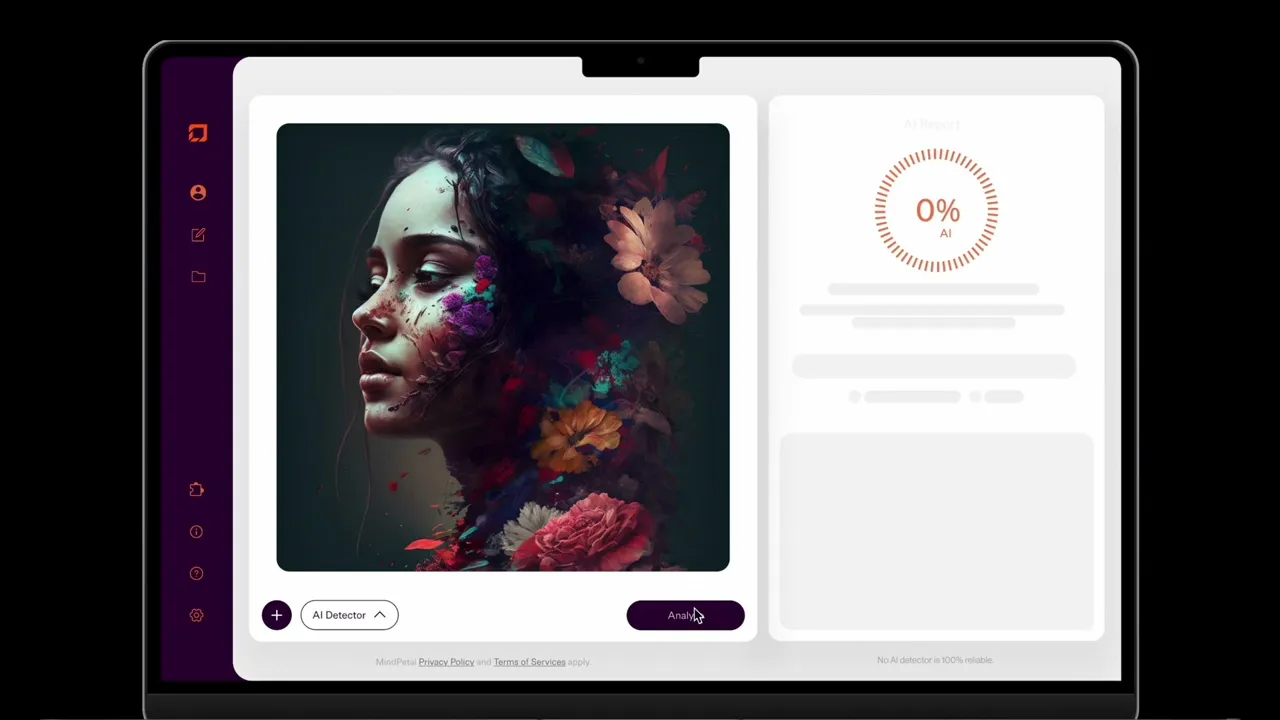

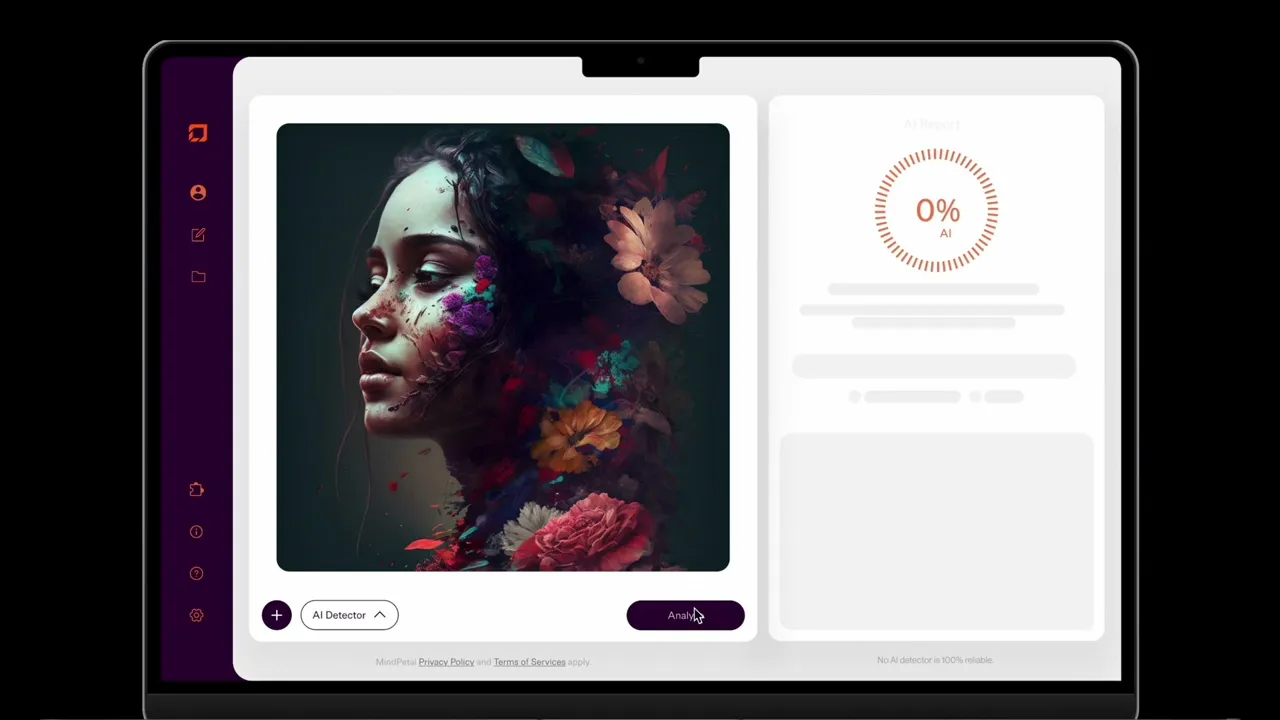

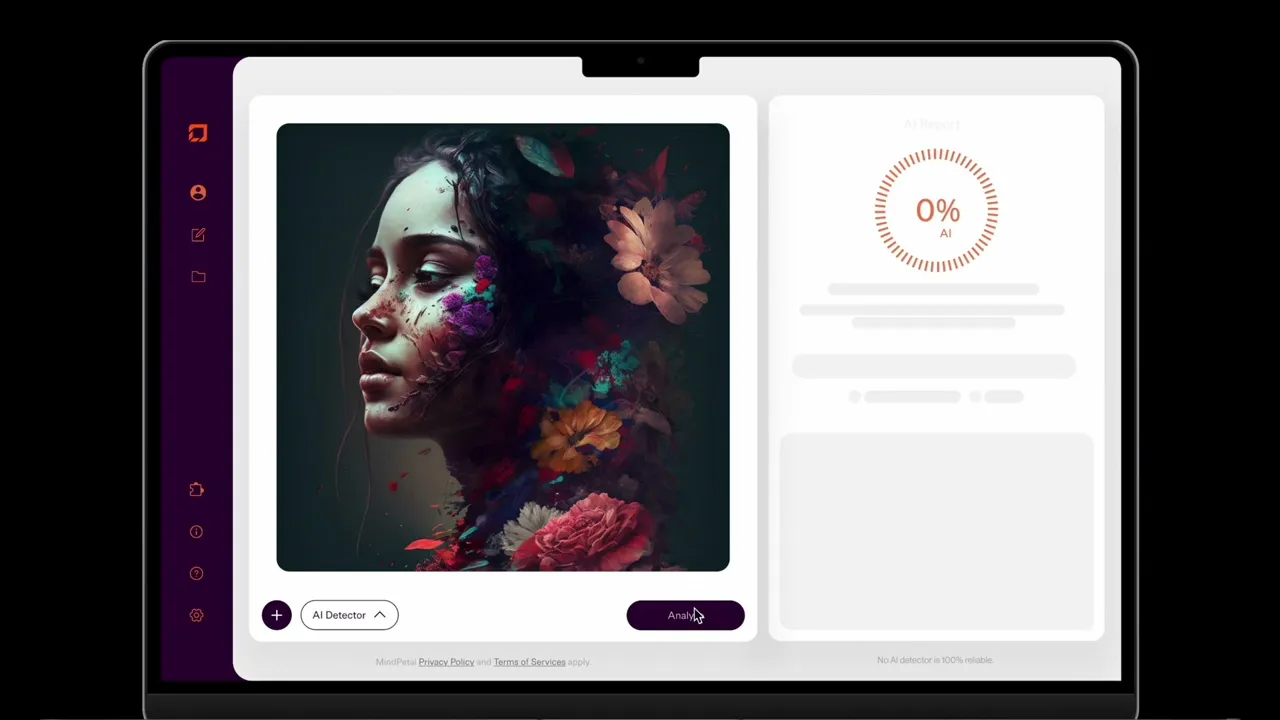

Prototype video demo

Here's a quick walkthrough of the design and features of the AI Recognition tool!

My Role & Impact

As the UX/UI Designer and Researcher, I led the end-to-end design process for this project. I conducted user interviews, built detailed personas, and facilitated ideation workshops using methods like Crazy 8s. I created low- and high-fidelity wireframes, developed the Figma prototype, and ensured the interface was intuitive, accessible, and aligned with WCAG standards. I also performed A/B testing to validate design decisions and collaborated closely with my teammate to transform technical AI detection outputs into a clear, human-centered experience.

Design Sprint

Design process

Map: Research & Understanding the Problem

Our first step was Mapping, where we focused on understanding the problem thoroughly. AI content detection is a complex and emerging challenge, so we started by studying existing AI content checkers in depth. We researched how these tools work, their strengths and limitations, and, most importantly, where they fall short on longer, more complex documents. This research showed us what current solutions were missing and where we could improve on them.

By analyzing various tools, we pinpointed the key features an effective AI content checker needs: transparency in AI detection, the ability to analyze large documents, and actionable results rather than just a vague percentage score. We also explored how the interface and experience could be simplified and made more accessible for users with varying levels of technical expertise.

After gathering insights from existing tools, we went a step further and created personas. They helped us understand the specific needs, frustrations, and goals of our target users, and they guided our design so it would address real-world challenges with solutions that were both effective and user-friendly.

Professor James Carter needed a fast and reliable way to analyze student essays for AI-generated content. His main frustration with existing tools was their lack of transparency: they often provide a probability score without explaining why content is flagged as AI-generated or which parts are most likely AI-written.

Sarah Reynolds needed a tool that could handle long, structured legal documents, offering detailed metadata to help verify their authenticity. She was frustrated by existing tools that didn’t process complex legal documents efficiently or provide enough detail to distinguish AI content accurately.

Sketch: Exploring Ideas and Concepts

In the Sketch phase, we generated multiple ideas through sketching and ideation exercises like Crazy 8s. After brainstorming, we voted on the concepts, narrowing our options to the strongest ideas to develop further.

Rapid ideation through Crazy 8s.

Decide: Choosing the Best Approach

In the Decide phase, we had to select the most effective design direction, a challenge with a team of just two. We had experimented with numerous layouts and structures, refining our designs over multiple iterations. To avoid bias toward our own preferences, we conducted A/B testing with six users, showing them the basic dashboard and asking them to perform tasks. Based on their feedback, we evaluated each design’s strengths and weaknesses and made final adjustments to keep the tool intuitive, user-friendly, and functional. This iterative process, combined with user input, helped us confidently choose the design to move forward with.

Wireframe iterations for design.

Prototype

In the prototyping phase, we created a high-fidelity Figma design featuring a simple upload interface and a clear results dashboard. Users can drag and drop files in various formats to detect AI-generated content. The dashboard highlights flagged sections and includes metadata like origin, timestamps, and the tool used. A rewrite zone lets users edit AI-detected text, and the overall design emphasizes clarity and accessibility.

Linked video demo above!

Test: Next Steps

The Test phase is the next step we’re looking forward to, where we’ll gather feedback from real users. We plan to observe how people interact with the prototype, identify any usability issues, and iterate to improve the tool’s functionality. This phase will be crucial for refining the user experience and ensuring that the tool is as effective as possible in detecting AI-generated content.
